This article covers the most common robots.txt issues and how to fix them.

Are your blog posts not getting crawled and indexed the way you want them to be?

To a large extent, the reason can be your robots.txt file.

Do you know what a robots.txt file is, what its benefits are, and how to add one in Blogger and WordPress?

New bloggers often run into problems because they lack in-depth knowledge of the robots.txt file.

A robots.txt file is a small plain-text file placed at the root of a website that tells search engine crawlers which parts of the site they may crawl.

It is written specifically for search engine crawlers, which makes it an important part of SEO.

Used correctly, it helps search engines crawl your website efficiently.

Despite this, many bloggers do not understand its real value, and if you are one of them, that is not good for your website.

If you want your website to be crawled as well as other bloggers’ websites, read through all the information in this article.

You will learn what robots.txt is and why it matters for SEO, so do not skip any section.

First, let’s look at what a robots.txt file is.

What Is A Robots.txt File?

The robots.txt file is how a website implements the Robots Exclusion Protocol.

It is a plain text file that tells web robots such as Googlebot what is crawlable on your site and what is not.

Crawlers read the rules you write in this file before they crawl your pages.

Even today, many bloggers do not know exactly what a robots.txt file is.

As the .txt extension suggests, it is a plain text file that contains only text.

Put simply, if there are pages on your blog that you do not want search engine crawlers to visit, you can say so in robots.txt, and every page you do not block stays open to crawling.

Well-behaved crawlers follow these rules, so they know which parts of your site to crawl and which to skip.

Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it.

The biggest advantage is that crawlers spend their time on the pages that matter, which makes your blog more SEO-friendly.
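
To make this concrete, here is a minimal sketch that uses Python’s standard `urllib.robotparser` module to read a small set of rules and check two URLs against them. The `/drafts/` path and the example.com URLs are assumptions made up for illustration, not rules from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; the paths are assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A URL under /drafts/ is blocked; everything else stays crawlable.
draft_allowed = parser.can_fetch("*", "https://example.com/drafts/new-post.html")
post_allowed = parser.can_fetch("*", "https://example.com/blog/first-post.html")
print(draft_allowed, post_allowed)  # prints: False True
```

Search engines use their own parsers, so treat this as a rough check rather than an exact copy of how Google reads the file.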

What Is Robots.txt In SEO?

We have covered what a robots.txt file is. Now let’s look at its role in SEO and why it is important.

The robots.txt file plays a very important role in technical SEO.

When doing SEO for your site, make sure it has a robots.txt file.

After you submit your site in Google Search Console, Googlebot visits your site.

If Googlebot does not find a robots.txt file there, it treats the entire website as open to crawling.

As a result, content that you did not want crawled can also end up being crawled and indexed.

A robots.txt file is not a ranking factor in itself; it is used to give crawlers instructions about what to crawl.

Why Are Robots.txt Files Important?

When search engine bots come to a website or blog, they first look for the robots.txt file and follow its rules.

Only after that do they crawl the content of the website.

If your website does not have a robots.txt file, or the file gives no instructions, search engines assume they may crawl everything, including content you would rather keep out of search results.

If the file does contain instructions, the bots follow them while crawling the website.

11 Common Robots.txt Issues

Many things can go wrong with a robots.txt file, but here we will cover the issues that come up most often.

- 1. Missing Robots.txt

- 2. Adding Disallow To Avoid Duplicate Content

- 3. Use Of Absolute URLs

- 4. Serving Different Robots.txt Files

- 5. Adding Allow vs Disallow

- 6. Using Capitalized Directives vs Non-Capitalized

- 7. Adding Disallow Lines To Block Private Content

- 8. Adding Disallow To Code That Is Hosted On A Third-Party Website

- 9. Robots.txt Not Placed In Root Folder

- 10. Added Directives to Block All Site Content

- 11. Wrong File Type Extension

1. Missing Robots.txt

When a website does not have a robots.txt file, search engines have no instructions about what to crawl and what to skip on that website.

In that case, crawlers treat every URL as crawlable and rely only on robots meta tags and X-Robots-Tag HTTP headers for indexing instructions.

The best way to solve this problem is to add a robots.txt file to your website.

The biggest advantage is that Google then knows which parts of your website to crawl and which to leave alone.

Note: Search engines allocate a crawl budget to every website.

If we use that crawl budget well, our posts will be crawled without trouble.

If we waste the crawl budget on unimportant URLs, our main pages and posts may be crawled late or not at all.
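
The effect of having no rules can be sketched with Python’s standard `urllib.robotparser`. The helper name `allowed_without_rules` and the example URL are hypothetical; the point is only that an empty rule set blocks nothing.

```python
from urllib.robotparser import RobotFileParser


def allowed_without_rules(url: str) -> bool:
    """Check a URL against an empty rule set, as if robots.txt had no rules."""
    parser = RobotFileParser()
    parser.parse([])  # no lines at all: nothing is disallowed
    return parser.can_fetch("Googlebot", url)


# Even an admin-style path is open to crawling when there are no rules.
print(allowed_without_rules("https://example.com/wp-admin/"))
```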

2. Adding Disallow To Avoid Duplicate Content

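It is very important that search engines can crawl your website properly.

Do not use Disallow rules in robots.txt to deal with duplicate content, because crawlers cannot see a canonical tag on a page they are not allowed to crawl.

Instead, leave the duplicate pages crawlable and use canonical tags to point to the preferred version.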

3. Use Of Absolute URLs

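Allow and Disallow rules in robots.txt are valid only for relative paths, which are matched from the root of the host that serves the file.

Do not write absolute URLs in these rules. The one exception is the Sitemap line, which should use the full, absolute URL of your sitemap.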
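
A quick way to catch this mistake is to scan the file for Allow or Disallow lines whose value is a full URL. The sketch below is a simple check written for this article, not part of any official tool; the function name `find_absolute_rule_paths` and the sample rules are assumptions.

```python
def find_absolute_rule_paths(robots_txt: str) -> list[str]:
    """Return Allow/Disallow lines whose value is a full URL instead of a path."""
    problems = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field in ("allow", "disallow") and value.startswith(("http://", "https://")):
            problems.append(raw_line.strip())
    return problems


# Hypothetical file: the first Disallow line wrongly uses an absolute URL.
SAMPLE = """\
User-agent: *
Disallow: https://example.com/private/
Disallow: /tmp/
Sitemap: https://example.com/sitemap.xml
"""

print(find_absolute_rule_paths(SAMPLE))
```

The Sitemap line is left alone on purpose, since a full URL is expected there.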

4. Serving Different Robots.txt Files

Serving different robots.txt files to different user agents is not recommended.

Every website should serve the same robots.txt file to all visitors and crawlers, whether it targets a national or an international audience.

5. Adding Allow vs Disallow

You do not need to add an Allow rule for everything on your website, because anything that is not disallowed is allowed by default.

Allow rules are only needed to override a Disallow rule in the same robots.txt file, for example to open one page inside a blocked folder.

If your file contains only Disallow rules, that is fine.

But when you mix Allow and Disallow rules, write the paths precisely so that it is clear which rule applies to which URL.
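
Here is a small sketch of an Allow rule that opens one page inside a blocked folder, checked with Python’s standard `urllib.robotparser`. The `/members/` paths are hypothetical. Note one assumption about ordering: Python’s parser applies the first rule that matches, while Google applies the most specific (longest) matching rule, so the Allow line is placed first to give the same answer under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths. Allow comes before Disallow so that first-match
# parsers and longest-match parsers agree on the result.
RULES = """\
User-agent: *
Allow: /members/signup.html
Disallow: /members/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

signup_ok = parser.can_fetch("*", "https://example.com/members/signup.html")
profile_ok = parser.can_fetch("*", "https://example.com/members/profile.html")
print(signup_ok, profile_ok)  # prints: True False
```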

6. Using Capitalized Directives vs Non-Capitalized

Always keep in mind that the paths in robots.txt rules are case-sensitive, while directive names such as Disallow are not.

Many CMS platforms generate URLs automatically, and those URLs may contain both upper-case and lower-case letters, so make sure each rule matches the exact case of the URL.
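
The following sketch shows both halves of this behaviour with Python’s standard `urllib.robotparser`: the directive name is accepted in upper case, but the path only matches URLs with the same letter case. The `/Downloads/` path is a made-up example.

```python
from urllib.robotparser import RobotFileParser

# The directive name is written in upper case on purpose; the path
# /Downloads/ is a hypothetical example.
RULES = """\
User-agent: *
DISALLOW: /Downloads/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

upper_blocked = not parser.can_fetch("*", "https://example.com/Downloads/file.pdf")
lower_blocked = not parser.can_fetch("*", "https://example.com/downloads/file.pdf")
print(upper_blocked, lower_blocked)  # prints: True False
```

If your CMS serves the same page under both spellings, you would need a rule for each one.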

7. Adding Disallow Lines To Block Private Content

Adding Disallow lines to your robots.txt file does not protect private content.

It is actually a security risk: robots.txt is publicly readable, so the Disallow lines reveal exactly where your private and internal content is stored.

Use server-side authentication to block access to private content instead.

8. Adding Disallow To Code That Is Hosted On A Third-Party Website

Your robots.txt file only applies to your own host, so it cannot control content that is hosted on a third-party website.

If you want your content removed from a third-party website, you have to contact the webmaster of that website and request the removal.

Errors often appear in this situation because it can be very difficult to tell which source server a specific piece of content is coming from.

9. Robots.txt Not Placed In Root Folder

Always make sure that your robots.txt file is placed in the topmost (root) directory of your website, not inside a folder or subdirectory.

Crawlers only look for the file at the root, for example https://example.com/robots.txt, so a file stored in a subdirectory is ignored.
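
To see where crawlers will look for the file, you can derive the root-level address from any page URL. This is a minimal sketch using Python’s standard `urllib.parse`; the helper name `robots_txt_url` and the example URL are assumptions.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/2024/post.html?ref=home"))
# prints: https://example.com/robots.txt
```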

10. Added Directives to Block All Site Content

While a website is being developed, the default robots.txt file is often set up to block crawlers from the whole site.

If that file is still in place after launch, the entire content of your website stays blocked.

This happens when a rule such as Disallow: / is applied to all user agents.

To fix it, reset the file and remove or narrow that rule before the site goes live.
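
A simple pre-launch check can catch a leftover block-everything rule. The sketch below uses Python’s standard `urllib.robotparser` and tests only whether the home page is fetchable, which is a rough heuristic rather than a full audit; the function name and both sample files are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocks_whole_site(robots_txt: str, user_agent: str = "*") -> bool:
    """Return True when the rules stop user_agent from fetching the home page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# Hypothetical files: one left over from development, one meant for launch.
DEV_FILE = "User-agent: *\nDisallow: /\n"
LIVE_FILE = "User-agent: *\nDisallow: /wp-admin/\n"

print(blocks_whole_site(DEV_FILE), blocks_whole_site(LIVE_FILE))  # prints: True False
```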

11. Wrong File Type Extension

Google Search Console will report whether it can read your robots.txt file.

After you create your file, you can use a robots.txt tester to validate it.

Always make sure that the file is a plain text file named robots.txt and that it is saved in UTF-8 encoding.
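
Both points, the file name and the encoding, can be checked before you upload the file. This is a small illustrative helper, not an official validator; the name `check_robots_file` and the sample inputs are assumptions.

```python
def check_robots_file(filename: str, data: bytes) -> list[str]:
    """Return a list of problems found with the file name or encoding."""
    problems = []
    if filename != "robots.txt":
        problems.append(f"file must be named robots.txt, got {filename!r}")
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        problems.append("file is not valid UTF-8")
    return problems


print(check_robots_file("robots.txt", b"User-agent: *\nDisallow:\n"))  # prints: []
print(len(check_robots_file("Robots.txt.doc", b"\xff\xfe")))  # prints: 2
```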

How To Create A Robots.txt File

You can use a robots.txt generator or any plain text editor to create the robots.txt file.

- – First, create a file named robots.txt.

- – Then add your rules to the file.

- – Upload the robots.txt file to the root of your website.

- – Finally, test your robots.txt file.
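
The steps above can also be sketched in code. The helper below builds the text of a simple robots.txt file with one rule group and a Sitemap line; the function name `build_robots_txt`, the blocked paths, and the sitemap URL are all assumptions chosen for illustration.

```python
def build_robots_txt(disallow: list[str], sitemap_url: str, user_agent: str = "*") -> str:
    """Build robots.txt text with one rule group and a Sitemap line."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


content = build_robots_txt(["/wp-admin/", "/search"], "https://example.com/sitemap.xml")
print(content)

# To save it, write the text to a file named robots.txt in UTF-8,
# then upload that file to the root of the site:
# with open("robots.txt", "w", encoding="utf-8") as f:
#     f.write(content)
```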

How To Test Your Robots.txt

Follow these steps to test your robots.txt file:

- – First, open the robots.txt tester tool.

- – Type the URL of the page you want to check in the text box.

- – Select the user-agent.

- – Click the Test button.
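
If you prefer to test from a script, the same idea can be approximated with Python’s standard `urllib.robotparser`: give it the rules, a user-agent, and the URLs to check. The helper name `check_urls`, the rules, and the URLs below are hypothetical, and the result may differ from Google’s own tester in edge cases.

```python
from urllib.robotparser import RobotFileParser


def check_urls(robots_txt: str, user_agent: str, urls: list[str]) -> dict[str, bool]:
    """Map each URL to True (crawlable) or False (blocked) for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


RULES = "User-agent: *\nDisallow: /search\n"
report = check_urls(RULES, "Googlebot", [
    "https://example.com/search?q=seo",
    "https://example.com/about.html",
])
for url, allowed in report.items():
    print("allowed" if allowed else "blocked", url)
```

To test a live site instead of a string, `RobotFileParser` also offers `set_url()` and `read()`, which download the file before you call `can_fetch()`.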

Robots.txt Specifications

The robots.txt specification covers many topics, such as:

- – Caching

- – File Format

- – Syntax

- – User-agent

- – Robots.txt Sitemap

- – Allow and Disallow

- – Robots.txt example

- – Error handling

- – Handling HTTP status codes

- – Grouping of lines and rules

- – File location

- – Range of validity

Robots Exclusion Protocol

It is also called the robots exclusion standard, or simply robots.txt.

It is a standard that websites use to communicate with web crawlers and other web bots.

Bots read it before they crawl and index a site.

Through this standard, we tell web bots which parts of our website they should not access.

What Are The Advantages Of Robots.txt?

Now let’s take a closer look at the advantages of robots.txt.

- – First of all, robots.txt tells search engine bots which parts of the content should be crawled and which should not.

- – Secondly, it improves a website’s SEO by keeping crawlers away from pages that have no search value.

- – Thirdly, it keeps well-behaved crawlers out of sections of the website that you do not want crawled, although it does not make them truly private.

- – Fourthly, it gives search engines clear instructions so that they crawl the content in the proper way.

- – Lastly, low-quality pages on a website can be blocked from crawling.

Important Concepts Related To Robots.txt

Here are some important terms that are used in a robots.txt file.

- – Allow: when you allow a path in the file, it means that crawlers may crawl that part of your site.

- – Disallow: when you disallow a path, it means that you do not want crawlers to crawl it.

- – User-agent: this line names the crawler that the rules below it apply to, and * means all crawlers.

- – User-agent: Mediapartners-Google addresses the Google AdSense crawler, so you can give it its own rules.
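
These terms come together when a file has more than one rule group. The sketch below uses Python’s standard `urllib.robotparser` to show that the AdSense crawler and other crawlers can receive different answers for the same URL. The rules and the `/search` path are assumptions for illustration, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# An illustrative file with two groups; the /search path is an assumption.
# An empty Disallow value means "nothing is disallowed" for that group.
RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

url = "https://example.com/search/label/seo"
adsense_ok = parser.can_fetch("Mediapartners-Google", url)
others_ok = parser.can_fetch("Googlebot", url)
print(adsense_ok, others_ok)  # prints: True False
```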

How To Add Robots.txt In Blogger

Since a recent update to Blogger, the way to add a robots.txt file has changed a bit.

First, go to Settings, and there turn on the custom robots.txt option.

Robots.txt is one of the tools you use whenever you want to make your blog SEO-friendly.

But most people leave this setting at its default.

If you have migrated your blog from Blogspot to WordPress, you should set up robots.txt again on the new site.

In this way, you can easily use a robots.txt file on Blogger.

If you are on Blogger and do not want to use this feature, you can leave the default in place, but if your website is on WordPress, you should definitely use it.

How To Setup Robots.txt File In WordPress?

If you use WordPress, you probably know the Rank Math or Yoast SEO plugins.

I assume that you have one of them installed on your WordPress website.

I use the Rank Math plugin for SEO on my WordPress website.

The process is similar for both plugins.

To set up robots.txt, open the plugin’s tools on your website and click the file editor button.

There you can paste your rules and the URL of your sitemap, and then press the button that saves the changes to robots.txt.

After this, your robots.txt file will be in place on your WordPress site.

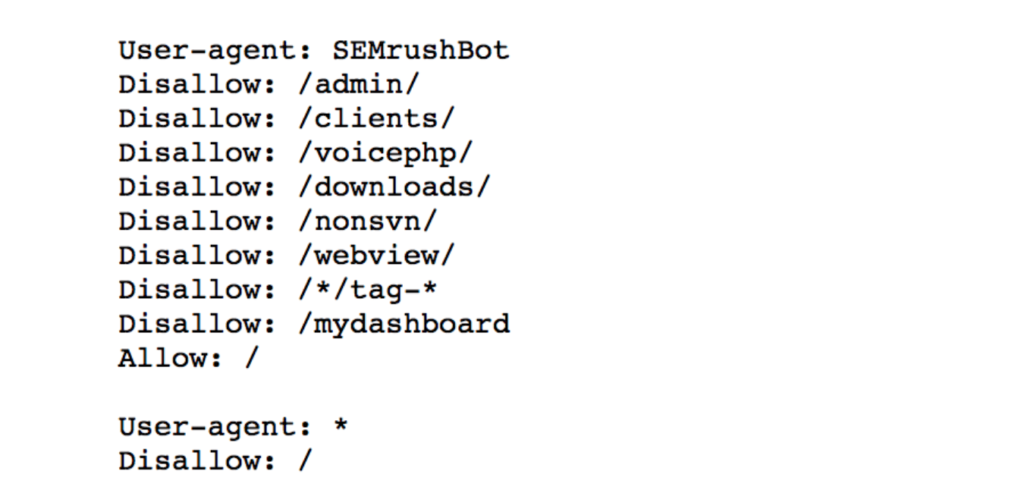

Robots.txt Disallow All Example

User-agent: *

Disallow: /

Failed: Robots.txt Unreachable

This error means that a crawler could not fetch your robots.txt file. Common causes include:

- Incorrect robots.txt file location

- File permission issues

- Server misconfiguration

- Content Delivery Network (CDN) issues

- URL or DNS issues

- Firewall or security settings

- Website migration or redesign
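
Whatever the cause, what matters to a crawler is the HTTP status it receives when it requests the file. The sketch below is a simplified summary of how Google’s public documentation describes its handling of those statuses; the function name `crawl_effect` is hypothetical, and details such as redirect limits and long-running server errors are left out, so treat it as an approximation.

```python
def crawl_effect(status_code: int) -> str:
    """Summarize how Google documents its handling of a robots.txt HTTP status."""
    if 200 <= status_code < 300:
        return "parse the file and follow its rules"
    if 300 <= status_code < 400:
        return "follow the redirect to find the file"
    # 429 is grouped with server errors rather than with other 4xx codes.
    if status_code == 429 or status_code >= 500:
        return "treat the site as temporarily disallowed"
    if 400 <= status_code < 500:
        return "crawl as if no robots.txt exists"
    return "unknown status"


for code in (200, 404, 503):
    print(code, "->", crawl_effect(code))
```

A 404 therefore behaves much like a missing file, while repeated server errors can pause crawling of the whole site, which is why an unreachable robots.txt deserves a quick fix.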

Conclusion

In this article, we have talked specifically about common robots.txt issues.

We saw that a well-configured robots.txt file supports our website’s SEO.

But we should use it properly, otherwise it may have a negative impact on our website.

Many robots.txt issues affect our website without our knowledge.

That’s why we should audit our website from time to time.

Have you ever had a robots.txt issue? Has your website been impacted because of it?

If so, go through the points in this article and apply them to your website today.

If you liked this article, share it with your friends and leave a comment below.

You can also read my other articles.

FAQ

Is robots.txt vulnerable?

The text file itself is not vulnerable. A file called robots.txt is simply a set of instructions for web robots.

Is robots.txt obsolete?

No. In 2019, Google announced that it would stop supporting the unofficial noindex directive in robots.txt, but it still honors the file’s crawling rules.

How could robots.txt lead to a security risk?

Listing sensitive locations in a publicly accessible file carries some risk, even if the file itself does not pose a direct security concern, because it tells anyone who reads it where those locations are.

What should you block in a robots.txt file and what should you allow?

In essence, robots.txt works by steering crawlers away from specific content. You might block URLs to keep Google from crawling private photos, expired special offers, or other pages that you are not ready for users to view. Blocking low-value URLs in this way can help your SEO efforts.

What is the maximum size limit of robots.txt in Google?

Google currently enforces a robots.txt file size limit of 500 kibibytes (KiB). Any content beyond that limit is ignored. You can reduce the size of the file by consolidating rules.
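
Checking a file against this limit is simple arithmetic: 500 KiB is 500 × 1024 bytes. The sketch below assumes you already have the file content as bytes; the names `GOOGLE_LIMIT_BYTES` and `exceeds_google_limit` are made up for this example.

```python
GOOGLE_LIMIT_BYTES = 500 * 1024  # 500 KiB expressed in bytes


def exceeds_google_limit(data: bytes) -> bool:
    """Return True when the robots.txt content is larger than 500 KiB."""
    return len(data) > GOOGLE_LIMIT_BYTES


print(GOOGLE_LIMIT_BYTES)  # prints: 512000
print(exceeds_google_limit(b"User-agent: *\nDisallow: /search\n"))  # prints: False
```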

What is the risk of using robots?

Risks include exposure to new hazards such as lasers and electromagnetic radiation, as well as accidents that may happen because of a lack of information, comprehension, or control of robotic work operations.
